Published in the journal Science, the results suggest an extended period of missed opportunity when intensive testing and contact tracing might have prevented SARS-CoV-2 from becoming established in North America and Europe.

The paper also challenges suggestions that linked the earliest known cases of COVID-19 on each continent in January to outbreaks detected weeks later, and provides valuable insights that could inform public health response and help with anticipating and preventing future outbreaks of COVID-19 and other zoonotic diseases.

"Our aspiration was to develop and apply powerful new technology to conduct a definitive analysis of how the pandemic unfolded in space and time, across the globe," said University of Arizona researcher Michael Worobey, who led an interdisciplinary team of scientists from 13 research institutions in the U.S., Belgium, Canada and the U.K. "Before, there were lots of possibilities floating around in a mish-mash of science, social media and an unprecedented number of preprint publications still awaiting peer review."

The team based their analysis on results from viral genome sequencing, which began immediately after the virus was identified. This work quickly grew into a worldwide effort unprecedented in scale and pace and has yielded tens of thousands of genome sequences, publicly available in databases.

Contrary to widespread narratives, the first documented arrivals of infected individuals traveling from China to the U.S. and Europe did not snowball into continental outbreaks, the researchers found.

Instead, swift and decisive measures aimed at tracing and containing those initial incursions of the virus were successful and should serve as model responses directing future actions and policies by governments and public health agencies, the study's authors conclude.

How the Virus Arrived in the U.S. and Europe

A Chinese national flying into Seattle from Wuhan, China, on Jan. 15 became the first patient in the U.S. shown to be infected with the novel coronavirus and the first to have a SARS-CoV-2 genome sequenced. This patient was designated 'WA1.' It was not until six weeks later that several additional cases were detected in Washington state.

"And while all that time goes past, everyone is in the dark and wondering, 'What's happening?'" Worobey said. "We hope we're OK, we hope there are no other cases, and then it becomes clear, from a remarkable community viral sampling program in Seattle, that there are more cases in Washington and they are genetically very similar to WA1's virus."

Worobey and his collaborators tested the prevailing hypothesis suggesting that patient WA1 had established a transmission cluster that went undetected for six weeks. Although the genomes sampled in February and March share similarities with WA1, they are different enough that the idea of WA1 establishing the ensuing outbreak is very unlikely, they determined. The researchers' findings indicate that the jump from China to the U.S. likely occurred on or around Feb. 1 instead.

The results also put to rest speculation that this outbreak, the earliest substantial transmission cluster in the U.S., may have been initiated indirectly by dispersal of the virus from China to British Columbia, Canada, just north of Washington State, and then spread from Canada to the U.S. Multiple SARS-CoV-2 genomes published by the British Columbia Center for Disease Control appeared to be ancestral to the viral variants sampled in Washington State, strongly suggesting a Canadian origin of the U.S. epidemic. However, the present study revealed sequencing errors in those genomes, thus ruling out this scenario.

Instead, the new study implicates a direct-from-China source of the U.S. outbreak, right around the time the U.S. administration implemented a travel ban for travelers from China in early February. The nationality of the "index case" of the U.S. outbreak cannot be known for certain because tens of thousands of U.S. citizens and visa holders traveled from China to the U.S. even after the ban took effect.

A similar scenario marks the first known introduction of coronavirus into Europe. On Jan. 20, an employee of an automotive supply company in Bavaria, Germany, flew in for a business meeting from Shanghai, China, unknowingly carrying the virus, ultimately leading to infection of 16 co-workers. In that case, too, an impressive response of rapid testing and isolation prevented the outbreak from spreading any further, the study concludes. Contrary to speculation, this German outbreak was not the source of the outbreak in Northern Italy that spread widely across Europe and eventually to New York City and the rest of the U.S.

The authors also show that this China-to-Italy-US dispersal route ignited transmission clusters on the East Coast slightly later in February than the China-to-US movement of the virus that established the Washington State outbreak. The Washington transmission cluster also predated small clusters of community transmission in February in California, making it the earliest anywhere in North America.

Early Containment Works

The authors say intensive interventions, involving testing, contact tracing, isolation measures and a high degree of compliance of infected individuals, who reported their symptoms to health authorities and self-isolated in a timely manner, helped Germany and the Seattle area contain those outbreaks in January.

"We believe that those measures resulted in a situation where the first sparks could successfully be stamped out, preventing further spread into the community," Worobey said. "What this tells us is that the measures taken in those cases are highly effective and should serve as a blueprint for future responses to emerging diseases that have the potential to escalate into worldwide pandemics."

To reconstruct the pandemic's unfolding, the scientists ran computer programs that carefully simulated the epidemiology and evolution of the virus, in other words, how SARS-CoV-2 spread and mutated over time.

"This allowed us to re-run the tape of how the epidemic unfolded, over and over again, and then check the scenarios that emerge in the simulations against the patterns we see in reality," Worobey said.

"In the Washington case, we can ask, 'What if that patient WA1 who arrived in the U.S. on Jan. 15 really did start that outbreak?' Well, if he did, and you re-run that epidemic over and over and over, and then sample infected patients from that epidemic and evolve the virus in that way, do you get a pattern that looks like what we see in reality? And the answer was no," he said.

"If you seed that early Italian outbreak with the one in Germany, do you see the pattern that you get in the evolutionary data? And the answer, again, is no," he said.

"By re-running the introduction of SARS-CoV-2 into the U.S. and Europe through simulations, we showed that it was very unlikely that the first documented viral introductions into these locales led to productive transmission clusters," said co-author Joel Wertheim of the University of California, San Diego. "Molecular epidemiological analyses are incredibly powerful for revealing transmissions patterns of SARS-CoV-2."

Other methods were then combined with the data from the virtual epidemics, yielding exceptionally detailed and quantitative results.
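
For readers curious about the "re-run the tape" logic, the idea can be illustrated with a toy Monte Carlo sketch in Python. This is not the researchers' software or model. The function names, the branching-process outbreak, the parameter values and the summary statistic (the smallest number of mutations separating any sampled genome from the seed case's genome) are all hypothetical choices made purely for illustration.

```python
import random


def simulate_outbreak(rng, generations=8, r0=2.0, mutation_prob=0.3, max_cases=2000):
    """Toy outbreak seeded by one case (hypothetical model, illustration only).

    Returns, for each case in the final generation, how many mutations its
    virus carries relative to the seed case's genome.
    """
    current = [0]  # the seed case carries the seed genome: 0 mutations
    for _ in range(generations):
        next_gen = []
        for mutations in current:
            # Secondary cases: binomial draw (4 trials) with mean r0.
            n_children = sum(1 for _ in range(4) if rng.random() < r0 / 4)
            for _ in range(n_children):
                # Each transmission may add one mutation to the lineage.
                gained = 1 if rng.random() < mutation_prob else 0
                next_gen.append(mutations + gained)
        if not next_gen:
            return []  # the outbreak died out
        if len(next_gen) > max_cases:
            # Cap the population so the toy stays fast.
            next_gen = rng.sample(next_gen, max_cases)
        current = next_gen
    return current


def fraction_matching(n_runs=500, sample_size=20, min_distance=2, seed=1):
    """Re-run the seeded outbreak many times and count how often a random
    sample of late cases looks like the (assumed) observed pattern: every
    sampled genome at least `min_distance` mutations away from the seed."""
    rng = random.Random(seed)
    matches = 0
    established = 0
    for _ in range(n_runs):
        final_cases = simulate_outbreak(rng)
        if len(final_cases) < sample_size:
            continue  # too few cases to sample; skip this run
        established += 1
        sampled = rng.sample(final_cases, sample_size)
        if min(sampled) >= min_distance:
            matches += 1
    return matches, established


if __name__ == "__main__":
    matches, established = fraction_matching()
    print(f"{matches} of {established} established outbreaks matched the pattern")
```

If only a small share of the simulated outbreaks reproduces the observed pattern, the scenario that seeded them becomes hard to defend. That is the general shape of the argument in the quotes above, although the study's actual simulations were far more detailed.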

"Fundamental to this work stands our new tool combining detailed travel history information and phylogenetics, which produces a sort of 'family tree' of how the different genomes of virus sampled from infected individuals are related to each other," said co-author Marc Suchard of the University of California, Los Angeles. "The more accurate evolutionary reconstructions from these tools provide a critical step to understand how SARS-CoV-2 spread globally in such a short time."

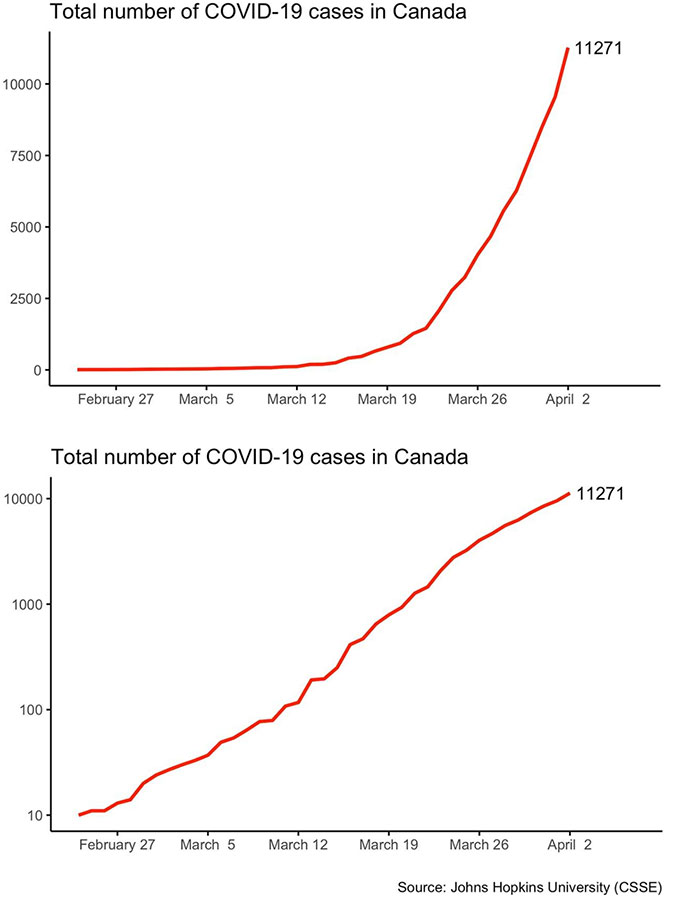

"We have to keep in mind that we have studied only short-term evolution of this virus, so it hasn't had much time to accumulate many mutations," said co-author Philippe Lemey of the University of Leuven, Belgium. "Add to that the uneven sampling of genomes from different parts of the world, and it becomes clear that there are huge benefits to be gained from integrating various sources of information, combining genomic reconstructions with complementary approaches like flight records and the total number of COVID-19 cases in various global regions in January and February."